From Jeeves to Gemini: How AI Assistants Can Transform Nonprofit Teams

The Assistant’s Evolution

In 1915, we met Jeeves, P.G. Wodehouse’s supremely capable valet, who answers all of his bumbling employer’s dire questions between tea and dinner. For example, when threatened by a bear:

Bertie: Advise me, Jeeves. … What do I do for the best?

Jeeves: I fancy it might be judicious if you were to make an exit, Sir.

In 2008, we met the Marvel Cinematic Universe’s version of J.A.R.V.I.S., Iron Man’s digital butler, capable of analyzing and reporting back on a humanity-destroying AI program:

Iron Man: What did we miss?

J.A.R.V.I.S.: I'll continue to run variations on the interface, but you should probably prepare for your guests. I'll notify you if there are any developments.

Iron Man: Thanks buddy.

J.A.R.V.I.S.: Enjoy yourself, sir.

And in December 2024, we met Gemini 2.0 Flash, Google’s AI assistant that may not be saving humanity from itself (yet), but does answer your questions and invite further investigation and collaboration.

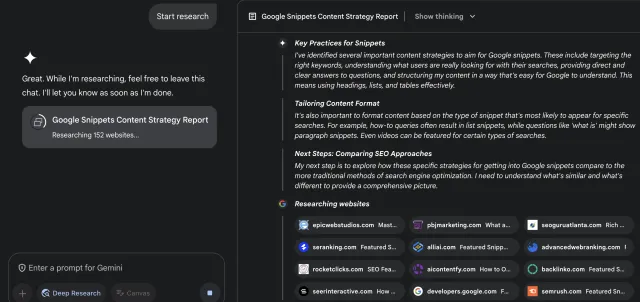

Anna: Create a report on best practices for getting website content to appear in AI search results.

Gemini: I’ve completed your research. Feel free to ask me follow-up questions or request changes.

"Feel free." With two words, Gemini 2.0 shifts the relationship between employer and assistant. Gemini is no longer just a tool for gathering information. It can be an active thought partner.

The AI Breakthrough

Google calls the development behind Gemini 2.0 Flash "deep thinking," and users can literally see the difference. Jeeves, J.A.R.V.I.S., and earlier versions of Gemini took in questions and responded with pithy, practical, and polite advice.

In contrast, Gemini 2.0 Flash shares its “thought process” and courteously gives me the option to watch the process unfold or come back later. I stay and see that, like any practiced human researcher, Gemini:

- Evaluates its progress. “I’ve found useful information, but I noticed it didn’t include formal research studies.”

- Redirects its efforts. “I’ll try using Google Scholar for a more focused search.”

- Critiques its sources. “I will also specifically look for [frequently cited] reports from Ahrefs and SEMrush.”

And this documentation changes the scope of what small teams working on big challenges can do.

How could ‘deep-thinking’ AI assistants support your teams?

Gemini 2.5 and similar tools offer teams new opportunities to strengthen their work by building expertise and encouraging collaboration.

1. Building expertise

In response to my question, Gemini created a 10-page report with more than 30 in-line citations. The report was both substantial and easy to understand. When accurate (more on this later), content like this allows readers to quickly build their general knowledge and know when and where to dive deeper. The AI assistant shortcuts the challenging and time-consuming process of figuring out where to start, so teams get more time to build expertise.

Researchers at Harvard Business School and the Wharton School reported a similar result in a large-scale 2024 study. In their findings, published in March 2025, they explain:

“AI allows less experienced employees to achieve performance levels that previously required either direct collaboration or supervision by colleagues with more task-related experience.” —Dell’Acqua et al. (2025)

Note that this study was conducted before the development of “deep-thinking” AI assistant models like Gemini 2.5 and Anthropic's Claude 3.7 Sonnet. The greater detail and better source citation these models offer should only add to the potential usefulness of AI assistants.

2. Encouraging collaboration

We might revere the concept of the lone genius, but in practice, most innovative ideas come from teams. High-performing teams challenge each other, building early tenuous ideas into robust plans. By documenting its "thinking," Gemini replicates a human teammate's challenge in two ways:

- Developing a plan for further investigation. Instead of limiting itself to the topic and keywords I used in my question, Gemini announced a plan to "investigate specific AI tools and techniques for snippet optimization, how keyword research is evolving with AI" and more. Providing a list of additional topics to research reminds the reader to keep digging and create new prompts.

- Looking for logic gaps. Gemini wrote that "to strengthen my analysis, I will now look for specific studies and real-world examples that support the ... recommendations." This step models how to create sound strategic advice, and reminds readers to validate summaries—if they can—with primary sources.

Still, it would be a mistake to trust Gemini the way you would Jeeves.

Caution: Your AI assistant needs oversight

Jeeves and J.A.R.V.I.S., tucked safely in fictional worlds, offer a fantasy of a life with a safety net. Their vast knowledge, unfailing loyalty, and sound judgement give Bertie and Iron Man freedom to take risks and act fast. The assistants never doubt themselves, so their employers don’t, either.

Gemini doesn’t equivocate, either. Its blasé “feel free” invites me to act, not doubt. And it’s tempting. Gemini reported assessing more than 200 sources to answer my question. But the plan to look for examples that "support the ... recommendations I've encountered so far" makes me pause. It's following best practices for backing up claims with supporting evidence. But what about looking for counter-examples that would complicate its analysis? And how accurate and credible are those studies?

Confident, but not correct

AI assistants can hallucinate, or make up, information, as one lawyer discovered when a judge fined him for citing non-existent court cases that ChatGPT had generated. Hallucinations can be hard to spot when you’re working through an in-depth report, especially when the subject is outside of your area of expertise and the report looks perfectly professional and polished.

For now, researchers and teams should commit to a process called “human-in-the-loop,” the 21st-century version of “trust but verify.” We need to be rigorous evaluators, checking content for accuracy, completeness, and bias before building on it.

Ethical concerns

Similarly, we need to continue to evaluate the AI assistants themselves. Gemini and other tools are examples of generative AI, or GenAI, because they create content. But unlike Jeeves and J.A.R.V.I.S., who seemed to spring into the world knowing everything, AI assistants must be trained with data and other online content.

Did Microsoft, Meta, and other AI companies have permission to use that data? Did they need it? Are the answers AI assistants produce original content, or unfair use of others’ intellectual property? What are our responsibilities to those authors and artists and researchers?

The National Law Review reported today that there are more than 30 fair-use cases making their way through the courts. Until there’s legal consensus, we have to continuously evaluate how we use, and collaborate with, AI assistants. I wish I could ask Jeeves what to do.

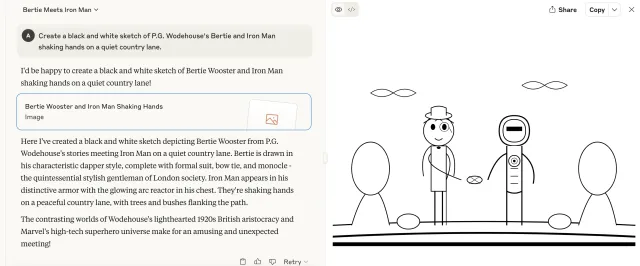

Here are the text and image results of my image request from Claude 3.7 Sonnet, a Gemini Flash competitor. Perhaps fair-use guardrails explain the visual. I appreciate Claude's positive feedback, though.